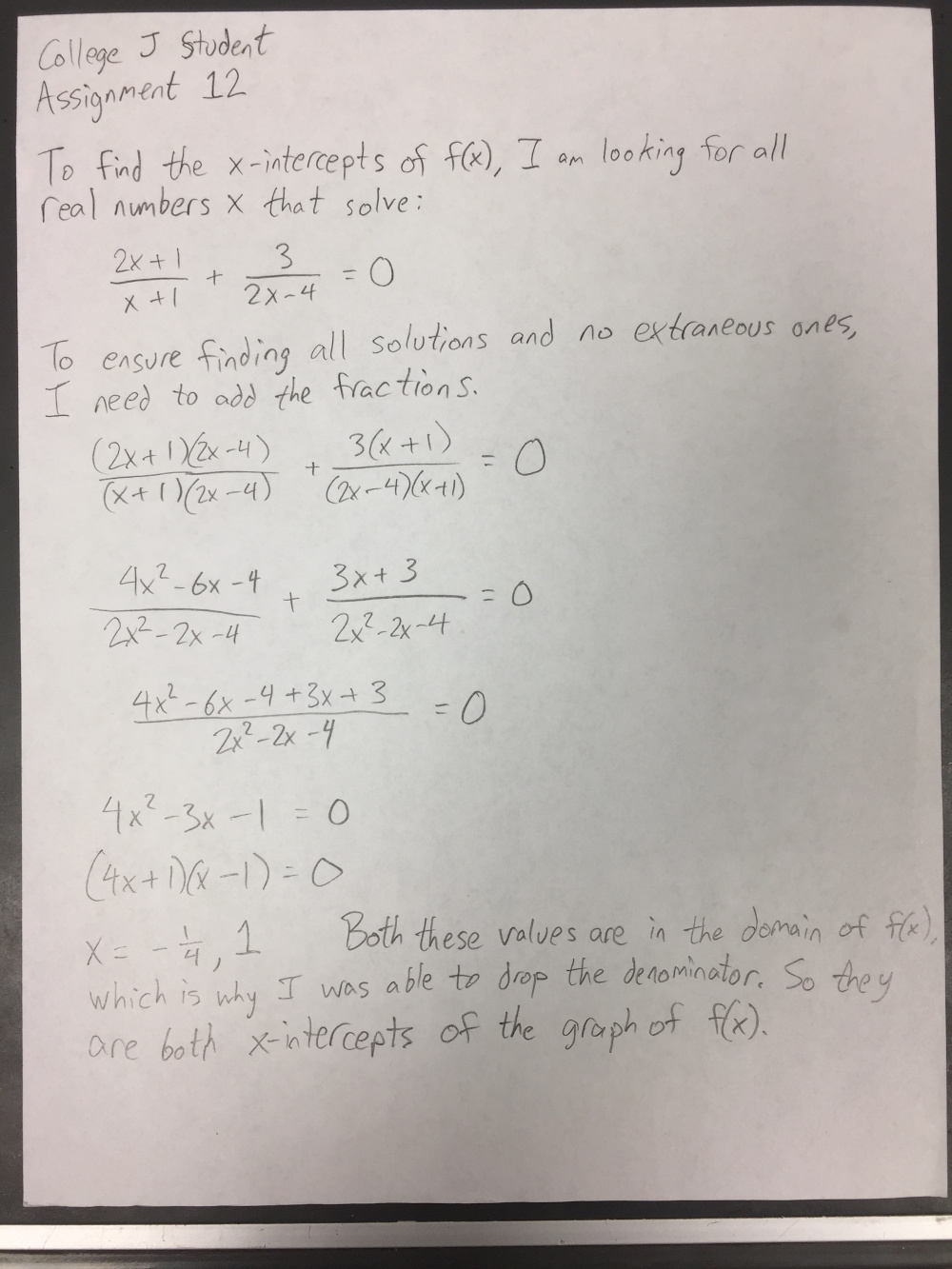

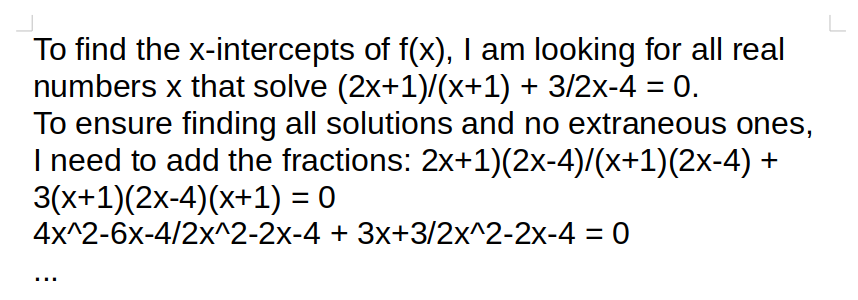

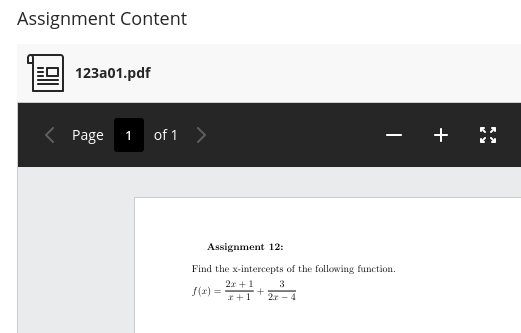

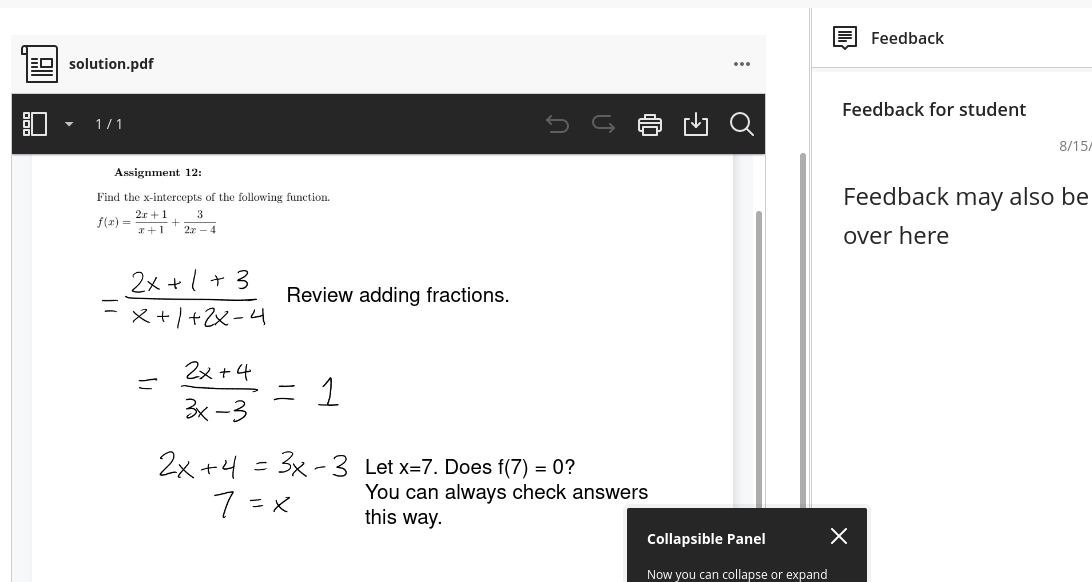

# Written assignments

This page will show you the best way to complete and format written assignments in my courses. Suppose we are near the end of an algebra course, and this is the problem statement:

|Assignment 12|
|---|
|Find the x-intercepts of the following function. <br> $f(x) = \dfrac{2x+1}{x+1} + \dfrac{3}{2x-4}$|

Your job is to write out a solution that interprets the problem, solves the problem, and interprets the result. I grade on correct answer, correct steps, and clarity. All are important. A solution is either sufficient (10 pts) or needs work (0 pts).

## Content

|Evaluation|Work|
|---|---|
|**Incorrect:** Barely any work. Although it's a correct answer, I have no evidence that the problem was solved by you. Do your own work on written assignments. I grade on correct answer, correct steps, and clarity.|$f(x) = 0$ <br><br> $-\dfrac{1}{4}, 1$|
|**Correct:** Correct answer, clear and correct steps. Sufficient balance of work and explanation. Not every step needs to be narrated. In this example, the student already mastered the simplification and other algebraic arithmetic steps earlier in the course.|To find the x-intercepts of $f(x)$, I am looking for all real numbers x that solve:<br><br> $\dfrac{2x+1}{x+1} + \dfrac{3}{2x-4} = 0$ <br><br> To make sure I find all solutions and no extraneous ones, I need to add the fractions. <br><br> $\dfrac{(2x+1)(2x-4)}{(x+1)(2x-4)} + \dfrac{3(x+1)}{(2x-4)(x+1)} = 0$ <br><br> $\dfrac{4x^2-6x-4}{2x^2-2x-4} + \dfrac{3x+3}{2x^2-2x-4} = 0$ <br><br> $\dfrac{4x^2-6x-4+3x+3}{2x^2-2x-4} = 0$ <br><br> $4x^2-3x-1 = 0$ <br><br> $(4x+1)(x-1) = 0$ <br><br> $x = -\dfrac{1}{4}, 1$ <br><br> Both these x-values are in the domain of $f(x)$, so they are not extraneous solutions. These are both x-intercepts of the graph of $f(x)$.|

## Format

|Example|Description|
|---|---|
||**Correct:** You can either write or type your assignments. The above solutions are examples of correct typesetting. You can use LaTeX, the equation editor of your word processor, or an online editor [such as this one](https://editor.codecogs.com/). If you remain in the sciences, you will be well served to learn the [LaTeX markup language](https://mathvault.ca/latex-guide/). If you handwrite your solution, use pencil and paper so that you can fix small mistakes now and in future attempts. You can scan or photograph it.|
||**Incorrect:** Messy, disorganized. We are in college, so write professionally.|
||**Incorrect:** Blurry, too dark/light, too low resolution, or otherwise unreadable.|
||**Incorrect:** Not a recognized file type. Needs to be an image or document file stored locally on your device and uploaded directly to Blackboard. (Do not send me links to Google Drive, Imgur, Discord or other sites. Blackboard can host the images fine.) Working formats include: pdf, png, jpg, doc, docx. Always preview your uploaded assignment to confirm it shows up.|
||**Incorrect:** Typed but not equation-formatted. PLEASE do not do this! It's a migraine to interpret, and it's prone to ambiguity that can change the entire meaning of your equations.|

**Incorrect** uploads will need to be reformatted and/or rewritten, and reuploaded.

## Upload process

These steps were done on a desktop computer. Phones may behave differently or not work at all.

|Step|Picture|
|---|---|
| 1. On the Blackboard course, open the Assignments folder. Find the assignment you are completing. Click "Start Attempt 1", then open the PDF to see the instructions. (You may need to download it.)||
| 2. When you are ready to upload, drag and drop your file into the submission box. Confirm it looks okay and click Submit. It will warn that you can’t make changes; submit anyway. I allow as many re-attempts as needed. If you click “Save” or “Save draft” but not “Submit”, I will not be able to see or grade your assignment. If days pass and you haven’t gotten a grade, this is usually why. <br><br> If you catch a mistake or upload the wrong file, don't worry. Follow the submission instructions again. I will grade your most recent attempt.| <br> |
| 3. After the due date, to view your grade and feedback, open the assignment again and click on Attempt 1 (or the most recent).||
| 4. Feedback can be found directly on the document, or to the right. If you turned in an attempt on time but got a zero, read the feedback, make corrections, and reupload the assignment by following these steps again. (Click the "Start Attempt _" button.) If there is a 0 and no feedback, something went wrong. Let me know if this happens and I will fix it.||

That's all that's necessary to complete assignments. If you get stuck, feel free to ask me for help or take advantage of any of the other resources listed in the syllabus. There's one resource in particular I should discuss...

# Thoughts on AI

> "I've come up with a set of rules that describe our reactions to technologies:
> 1. Anything that is in the world when you’re born is normal and ordinary and is just a natural part of the way the world works.
> 2. Anything that's invented between when you’re fifteen and thirty-five is new and exciting and revolutionary and you can probably get a career in it.
> 3. Anything invented after you're thirty-five is against the natural order of things."
>
> -Douglas Adams, The Salmon of Doubt: Hitchhiking the Galaxy One Last Time (2002)

A lot has been written about AI. I'm going to write some more. I, a human being, put the following words together in this order. This is me, my voice, and my experience. No AI was used in generating or revising any of this text.

Recently someone posed as me with the goal of getting into one of my bank accounts. That's how I feel whenever I ask a machine to talk or write or think for me. Ownership, authorship, and original thought will always matter on an existential level.
What you and I think and say and write matters, and that's why I assign written work.

Because this technology is changing every day, my thoughts and this page may change over time too. Like any technology, AI carries potential for good and bad, and we must look at the good use cases and determine if they are worth the cost.

## How do we know anything?

I grew up in the 1990s, when school tried and failed to get me to go to the library. Then by the 2000s it was taken for granted that "Google knew everything". But it didn't try to answer your question. It was a search engine that only listed some links. You would find what you were looking for on the first page of results, or not at all. If you wanted your product or answer to show up on that first page, you made sure it was good and correct and/or you used SEO (search engine optimization).

Since "AI" has become a marketing term, slapped onto every new product, we need to be more specific about what kind of AI we are dealing with. Most relevant for us are the ones that input and output text, called LLMs (large language models). LLMs do a "good" job of generating text depending on the genre or criteria, such as writing funny poems. But I observe more and more people consulting and relying on LLMs for facts.

LLMs fundamentally operate by asking repeatedly "based on my training and based on the last x words, which word is most likely to come next?" and then writing that word. (A prompt is part of those words.) 3Blue1Brown has [a brief video](https://www.youtube.com/watch?v=LPZh9BOjkQs) and a [whole series](https://www.youtube.com/playlist?list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi) explaining LLMs. Chapter 7 makes the case that they can "store" and retrieve a surprising number of facts.

We should be cautious about drawing any conclusions about how reality, logic, or our brains work based on this. We invented the LLM, not the other way around. Philosophers can debate this, but I don't think humans think like an LLM.
Or at least, not merely. [(And it could be a very bad sign if we did.)](https://neurosciencenews.com/ai-aphasia-llms-28956/) We do string together words by an unconscious set of rules, but there is something else, a person, guiding our words besides just the mechanics of grammar and syntax, or following the prompt we've been told to follow. We learn the mechanics, but with the goal of being able to communicate what's already in our minds that much more clearly.

If there's any doubt about the LLM's accuracy, we could use a search engine along with the LLM. The problem is that now the web is flooded with LLM-generated copy designed only to dominate at SEO, not for truth or clarity. This makes Google and all other search engines just about useless, along with future LLMs that train on this data! And there is no reliable algorithm to determine whether a piece of text was or wasn't written by a machine. We have to rely on our spider-sense, and I might have to frequent the library more.

The question you should ask about anything an LLM says is "How do I know that this is correct?" Even the disclaimers at the bottom admit that the LLM can make mistakes. But then we're back where we started: not knowing with certainty! We might actually be further away from the truth, since certain LLMs have demonstrated bias based on who owns them and what they're trained on. Someone with enough money and influence could generate enough instances of a lie on the web that any model trained on the web begins to present it as truth. But they don't even need to do that. Even before AI, it has been the case that if you say the right words, [the internet will tell you exactly what you want to hear.](./facts.png)

You might be thinking my distrust is misplaced. LLMs are good enough for low-stakes tasks like giving recommendations for movies, or ideas for a party. But regardless of the task, there's something else we should consider: the literal cost.
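
The "which word is most likely to come next?" loop described earlier in this section can be made concrete with a toy sketch. This is only an illustration under loud assumptions: it counts word pairs in one made-up sentence and looks at just the single previous word, whereas a real LLM uses a trained neural network over a much longer context. The names `train_bigram_counts` and `generate` are hypothetical and do not come from any library.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def generate(followers, prompt, max_new_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    output = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


# Made-up training text, chosen only for illustration.
training_text = "the cat sat on the mat and the cat sat down"
model = train_bigram_counts(training_text)
print(generate(model, "the", max_new_words=2))  # prints "the cat sat"
```

Notice that the sketch never checks whether its output is true. It only continues with whatever followed most often in its training text, which is why the question "How do I know that this is correct?" still has to be asked by the reader.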
## Processing power

[Saying please and thank you to ChatGPT costs millions of dollars, CEO says - USA Today](https://www.usatoday.com/story/tech/2025/04/22/please-thank-you-chatgpt-openai-energy-costs/83207447007/)

[America's largest power grid is struggling to meet demand from AI - Reuters](https://www.reuters.com/sustainability/boards-policy-regulation/americas-largest-power-grid-is-struggling-meet-demand-ai-2025-07-09/)

[What do Google's AI answers cost the environment? - Scientific American](https://www.scientificamerican.com/article/what-do-googles-ai-answers-cost-the-environment/)

Out of curiosity I tried running an LLM locally on my home computer using GPT4All, a frontend for hundreds of different free LLMs, the simplest of which took up 4 GB of disk space. I had 16 GB of RAM, which is sufficient for most modern video games (at least as of around 2020). I gave the LLM a simple prompt and it swiftly consumed all my RAM and crashed my computer. I upgraded to 64 GB of RAM, more than I will ever need, and was able to play with it for a while, but at every prompt my machine would freeze for about 15 seconds before generating. The results were always subpar and I couldn't help but think that I was rapidly eating up the lifespan of the parts of my computer. I figured it was better to use remote services on machines that are designed to handle it.

I eventually found some more lightweight programs and models, and with time the process may become more streamlined and cost-effective, but this gives you an idea of just how much processing power is consumed with each prompt sent. [Even ChatGPT agrees it's expensive.](https://chatgpt.com/share/6899ffaf-6390-800d-9bbd-37088fc5de61)

Somebody has to pay for the hardware and electricity, and the service wants you to be that person, and would love it if you became dependent on it. We are conditioned for instant gratification.
We want answers NOW, even if it ends up costing money, one time or by subscription. (And they are invariably harvesting the data you trust them with. As the old saying goes, "If it's free, you're the product." For security's sake, never enter sensitive, personally identifiable information into an AI.)

It comes back to the question of necessity. I have reached for AI to answer questions (such as technical or coding questions) which, if I had taken a moment to think, I would have known where to look to get quick, direct, free answers (such as the online documentation). I do not want to participate in a waste of money or electricity, even if it's one that's out of sight and out of mind. Always ask yourself, do you really **need** AI for this?

## Winning at chess without knowing the rules

My main focus here is on how AI applies to math and education. I require written assignments as a component of all my classes, because an overlooked aspect of mathematics is good communication. You are arguing a case. You are walking through your thought process in order to prove not just some result, but that you know what you're doing.

(For my part, I write all my own lessons, problems, tests, and keys. I do not use AI to write or grade any class content. Additionally, in all my classes, tests are in-person, paper-and-pencil, no technology allowed. One advantage to you is that this is equitable. Nobody has an edge over anyone else due to having a subscription to a better service.)

Ideally, school teaches us how to be self-motivated learners, but everyone including me has felt like taking shortcuts and avoiding learning. I have to stand in the way of these shortcuts. In the past, students would copy each other's work, go to Chegg or other online "tutors" who would do the work for them, or enter problems into automatic solvers like Symbolab, which would generate convoluted and difficult-to-follow solutions.
Now LLMs can write whole essays that are even more convoluted and prone to additional errors.

All of these shortcuts remind me of a way to cheat at chess, where you start two simultaneous games with two different players of as high a skill level as you want. You play one game as white and the other as black. Whatever move your opponent with the white pieces plays, you make that same move as white in the other game. Whatever reply your opponent with the black pieces makes there, you play that reply back in the first game, and so on. If you don't make any mistakes, you will win one game and lose the other, or draw both games. _And you can do this without knowing anything about the rules of chess._ It may look impressive, but you are reducing yourself to a postal service, and you'll get crushed in an honest game.

This is what it is like to take a problem I assign, feed it to an LLM, and send me its output as your own. Usually the work will need corrections. Now imagine taking my feedback, like "there's an error on this line", and reopening the LLM and asking it to revise its work. Will it detect its own error? And if it does, why didn't it give the right answers initially? And how do we know it checked its own work correctly? Could it "find" an error that wasn't there if the user insists hard enough that there is one? The LLM's job is to be helpful, after all.

In math we often want to avoid having to interpret a verbal problem. Some of us feel better about plain symbol manipulation, me included. But machines can do that! The human part of problem solving is in the interpretation of the question and the answer. Often when I pose a math problem to an LLM, it will very confidently charge ahead after blatantly dropping or swapping numbers or variables. LLMs are limited in memory as well; talk to an LLM for long enough and it forgets what was said earlier, leading to lapses in reasoning. Some problems are involved enough that this will invariably occur.
But even if it gets it right, **the biggest reason you shouldn't feed problems to an LLM is that your brain does not do any thinking.** Imagine asking someone else to lift weights for you and thinking it will make you stronger.

## Good shortcuts

One of my professors was fond of saying "The best mathematicians are lazy". Meaning, they are always looking for the solution that takes the least work. I said this for a while but I find myself having to qualify it more and more with time. Yes, math was designed to make our lives easier, and it can, as long as we learn it properly.

Rote learning has a place. Learning multiplication tables and the algorithms for adding and multiplying 2- and 3-digit numbers feels like drudgery at first, but "automating" these in our minds frees our memory and processing for greater tasks. When it comes to multiplication of 10-digit by 10-digit numbers, our instinct rightly says this is best entrusted to a basic calculator app. In lectures I always try to point out when I make this decision. But we wouldn't (shouldn't) use an LLM for this; it's overkill. One of my goals is to show how to choose and use the best tool for the job.

Along with our pocket calculator, AI is a tool. But like all tools, it is only as smart as the user. The best calculator in the world is useless in the hands of one who doesn't know how it works. We've already seen the basics, but if you dive in further and learn more of the math involved (it's a lot of linear algebra), you will understand exactly how AI works and what its limitations are. With enough work, you will even be able to create your own models to assist you in specific goals. Terence Tao has written extensively on positive use cases for AI, even at the highest levels of mathematical research, [at his blog](https://terrytao.wordpress.com/mastodon-posts/).

I invite you to class because **I want to show you the easy way**! I've been familiar with this content for decades.
I've learned a dozen different ways to express concepts and solve problems, and I want to point you in the direction of the best ones. More than that, I want to teach you how to learn.

At this point in education you may have heard that you "need to take responsibility for your own learning". This is now a matter of self-defense. AI is no longer just a temptation; it is being forced upon you. We reach for a search engine to find resources, but search engines have all turned into answer engines that do all the work for us. As an independent learner, you should recognize how harmful this is to you! For instance, if you are trying to use spaced repetition to remember facts, the worst thing that can happen is seeing the answer before you've had time to search your memory for it.

So I'm not convinced of AI's usefulness as a teaching/learning tool yet. [Study](https://pmc.ncbi.nlm.nih.gov/articles/PMC11020077/) after [study](https://arxiv.org/pdf/2506.08872v1) after [study](https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4895486) shows that AI's effects on learning are overwhelmingly negative. Specific concepts taught with clarity can only be found in a textbook, a demonstration, or anything else with a **person** as the crucial ingredient. But even if there were a perfect LLM teacher, the best teacher and best explanations in the world mean nothing if they don't translate into you engaging your brain and training yourself in new skills. And in 99% of use cases, AI is completely unnecessary for that.

It's an exciting time to be alive. Technology is incredible. Use it responsibly. Use it for good.